Misinformation and disinformation have become pervasive issues in today’s digital world, posing significant challenges in distinguishing truth from fiction. To unravel this complex web, we need to understand the nuances of misinformation and how technology can be harnessed to combat it effectively.

The Misinformation Spectrum

Misinformation isn’t a monolith; it spans a spectrum of content, from deliberate deception to authoritative reporting. Understanding these shades is critical:

- Intentional Disinformation: Content generated by bots or troll farms, primarily to generate revenue. Such videos often present misleading headlines or visuals that contradict the actual content, aiming to maximize engagement and profits.

- Clickbait: Clickbait content is intentionally salacious and often of low quality. While it does not always violate company policies, it frequently misleads users through false context or exaggerated claims.

- Opinionated Videos with False Ideology: These videos, created by regular individuals, endorse false ideologies. Addressing them is challenging, especially if they don’t violate community guidelines.

- Satire or Parody: Content designed for entertainment, with no harmful intent. However, because it can closely resemble genuine content, it can still fool viewers.

- Authoritative, High-Quality News: Trustworthy news content intended for news-seeking queries and clearly labeled as ‘News.’ Although facts are occasionally misreported, the intent is to provide authoritative, high-quality news.

Tech companies have made strides in algorithmically detecting significant events, but accurately identifying and sourcing information about still-developing details remains a hurdle, leaving room for bad actors to spread disinformation.

The Evolution of Recommendation Systems: Illuminating the Path Forward

In the ever-evolving digital landscape, recommendation systems play a pivotal role in shaping our online experiences. Initially designed to enhance user engagement and satisfaction, these systems have undergone a transformative journey to better understand user preferences and behaviors.

Early Days: The Clickbait Predicament

In the early days of the Internet, recommendation systems relied heavily on analyzing user clicks to optimize content. However, this approach inadvertently encouraged creators to produce sensational clickbait, often misleading users with provocative headlines. Users would click on a video only to find that the content didn’t match the enticing headline or thumbnail.
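To make the clickbait problem concrete, here is a minimal Python sketch of ranking purely by click-through rate (clicks divided by impressions). The `Video` class, the `rank_by_clicks` function and the catalog numbers are hypothetical illustrations, not any platform’s actual system; the point is only that a provocative title that earns more clicks rises to the top regardless of what the video delivers.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    impressions: int  # times the video was shown as a recommendation
    clicks: int       # times users clicked on it

    @property
    def ctr(self) -> float:
        """Click-through rate: fraction of impressions that produced a click."""
        return self.clicks / self.impressions if self.impressions else 0.0


def rank_by_clicks(videos: list[Video]) -> list[Video]:
    """Order candidates by click-through rate alone, highest first."""
    return sorted(videos, key=lambda v: v.ctr, reverse=True)


# Hypothetical catalog: same exposure, different click appeal.
catalog = [
    Video("In-depth explainer", impressions=1000, clicks=40),
    Video("You WON'T BELIEVE what happened next!", impressions=1000, clicks=120),
    Video("Local news report", impressions=1000, clicks=60),
]

for v in rank_by_clicks(catalog):
    print(f"{v.ctr:.2f}  {v.title}")
```

With these made-up numbers the sensational title ranks first, because nothing in the score reflects whether viewers were satisfied once they clicked.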

Raising the Bar: Quality Over Clicks

Recognizing the pitfalls of click-based algorithms, tech companies pivoted towards evaluating the quality of user engagement. Instead of merely analyzing clicks, they began considering factors like the amount of time users spent watching a video and whether they watched it to completion. This shift aimed to ensure that users not only clicked on content but found it genuinely satisfying.
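A watch-time objective can be sketched the same way. The scoring function below is an assumption for illustration: it multiplies total watch time by a factor that rewards completion, controlled by a hypothetical `completion_weight` parameter. Real platforms use learned models rather than a hand-written formula like this, and all names and numbers here are made up.

```python
from dataclasses import dataclass


@dataclass
class Video:
    title: str
    duration_s: float     # video length in seconds
    clicks: int           # number of times the video was opened
    total_watch_s: float  # watch time summed over all of those views

    @property
    def completion_rate(self) -> float:
        """Average fraction of the video watched per view, capped at 1.0."""
        if self.clicks == 0 or self.duration_s == 0:
            return 0.0
        return min(self.total_watch_s / self.clicks / self.duration_s, 1.0)


def engagement_score(v: Video, completion_weight: float = 0.5) -> float:
    """Hypothetical score: total watch time, scaled up for videos people finish.

    completion_weight = 0 ranks by raw watch time; 1 multiplies it fully by completion rate.
    """
    return v.total_watch_s * (1 - completion_weight + completion_weight * v.completion_rate)


catalog = [
    Video("In-depth explainer", duration_s=600, clicks=40, total_watch_s=40 * 480),
    Video("You WON'T BELIEVE what happened next!", duration_s=600, clicks=120, total_watch_s=120 * 30),
    Video("Local news report", duration_s=180, clicks=60, total_watch_s=60 * 150),
]

ranked = sorted(catalog, key=engagement_score, reverse=True)
for v in ranked:
    print(f"{engagement_score(v):8.0f}  {v.title}")
```

Under these assumptions the clickbait video drops to last place: it attracted the most clicks, but viewers left after about 30 seconds of a 10-minute video.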

Harnessing User Feedback: A Paradigm Shift

However, measuring satisfaction based solely on watch time wasn’t foolproof. Long viewing times didn’t always equate to a positive user experience. Tech companies realized the need for direct user feedback to gauge satisfaction accurately. Surveys and feedback mechanisms were introduced to gather users’ opinions on recommended content, enabling the refinement of recommendation algorithms.
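Surveys are sparse: only a small fraction of viewers answer them, so a raw average over a few responses is noisy. One common way to handle that is to shrink the mean rating toward a prior (a Bayesian-average style estimate) before combining it with watch time. The sketch below assumes a 1-to-5 star survey, a prior mean of 3 and a prior weight of 10 responses; the names and numbers are hypothetical, and this is not a description of any specific platform’s method.

```python
from dataclasses import dataclass, field


@dataclass
class Video:
    title: str
    avg_watch_s: float  # mean watch time per view, in seconds
    survey_ratings: list[int] = field(default_factory=list)  # 1-5 stars from sampled viewers


def smoothed_satisfaction(ratings: list[int], prior_mean: float = 3.0, prior_weight: int = 10) -> float:
    """Mean rating shrunk toward prior_mean, rescaled from the 1-5 scale to 0-1.

    prior_weight acts like that many imaginary responses at prior_mean, so a
    video with only a few ratings stays close to neutral.
    """
    mean = (prior_mean * prior_weight + sum(ratings)) / (prior_weight + len(ratings))
    return (mean - 1) / 4


def satisfaction_score(v: Video) -> float:
    # Watch time only counts in proportion to how satisfied surveyed viewers were.
    return v.avg_watch_s * smoothed_satisfaction(v.survey_ratings)


catalog = [
    Video("Outrage compilation", avg_watch_s=600, survey_ratings=[1, 2, 1, 2, 1, 1, 2, 1, 1, 2]),
    Video("Cooking tutorial", avg_watch_s=420, survey_ratings=[5, 4, 5, 5, 4, 5, 4, 5, 5, 5]),
]

ranked = sorted(catalog, key=satisfaction_score, reverse=True)
for v in ranked:
    print(f"{satisfaction_score(v):6.1f}  {v.title}")
```

Here the outrage compilation keeps viewers longer (600 vs. 420 seconds) but scores lower once survey responses are factored in, which is exactly the gap between long viewing time and a positive experience.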

The Challenge of Veracity: Raising the Bar Higher

Ensuring that recommendation systems present accurate, verifiable information became a pressing concern, especially in domains like science, medicine, news, or historical events. Tech companies started imposing a higher standard for videos promoted through recommendations. The focus shifted to providing content from authoritative sources, particularly in critical domains where credibility and accuracy were paramount.
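One simple way to express a higher bar for sensitive domains is to make source authoritativeness count more heavily in those topics. The sketch below assumes each candidate already has a base relevance score, a 0-to-1 authority estimate and a known topic; `SENSITIVE_TOPICS`, `authority_weight` and all sample values are hypothetical. A production system would typically derive these signals from classifiers and rater evaluations rather than fixed constants.

```python
from dataclasses import dataclass

# Hypothetical set of domains held to a higher standard (mirrors the examples in the text).
SENSITIVE_TOPICS = {"science", "medicine", "news", "history"}


@dataclass
class Candidate:
    title: str
    topic: str
    relevance: float  # base recommendation score, e.g. from an engagement model
    authority: float  # hypothetical 0-1 estimate of how authoritative the source is


def adjusted_score(c: Candidate, authority_weight: float = 2.0) -> float:
    """Scale relevance by authority**authority_weight in sensitive topics only.

    A larger authority_weight penalizes low-authority sources more sharply.
    """
    if c.topic in SENSITIVE_TOPICS:
        return c.relevance * c.authority ** authority_weight
    return c.relevance


candidates = [
    Candidate("Miracle cure doctors hate", "medicine", relevance=0.9, authority=0.1),
    Candidate("Vaccine FAQ from a public health agency", "medicine", relevance=0.6, authority=0.95),
    Candidate("Funny cat compilation", "entertainment", relevance=0.7, authority=0.2),
]

for c in sorted(candidates, key=adjusted_score, reverse=True):
    print(f"{adjusted_score(c):.3f}  {c.title}")
```

The low-authority "miracle cure" video falls to the bottom despite having the highest base relevance, while the entertainment video, outside the sensitive set, keeps its original score.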

The Complex Terrain: Why No ‘Silver Bullet’ Exists

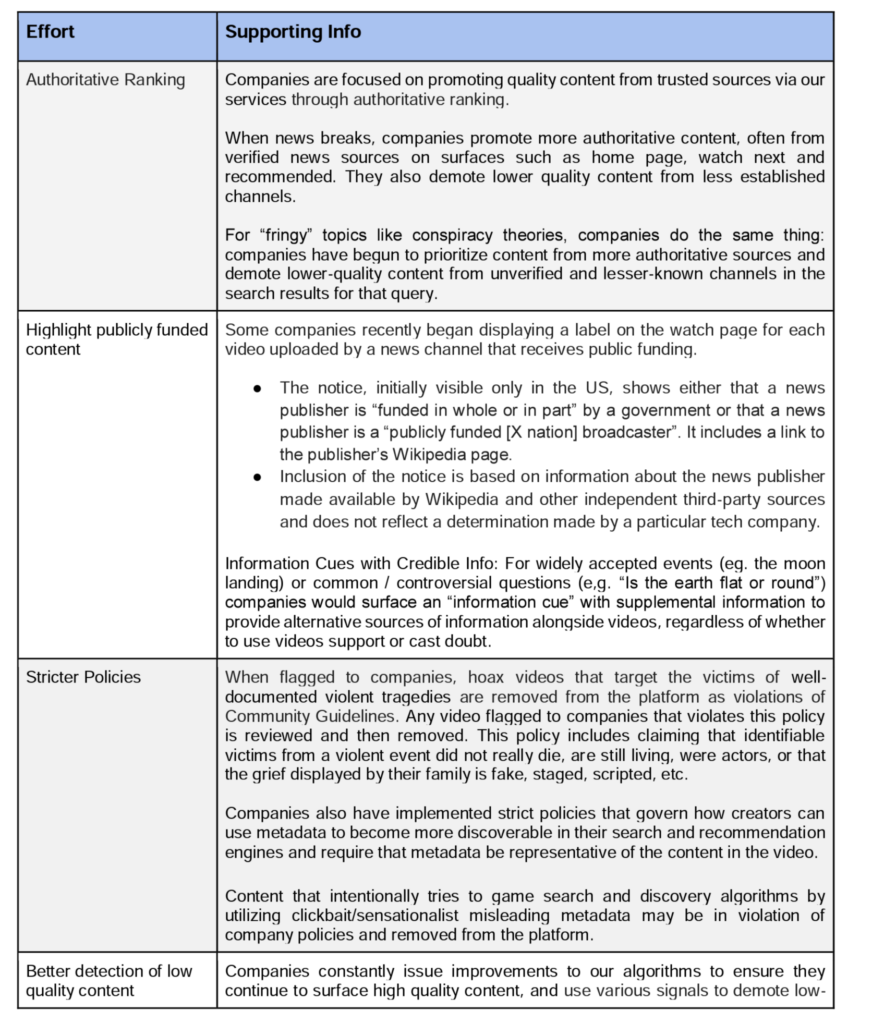

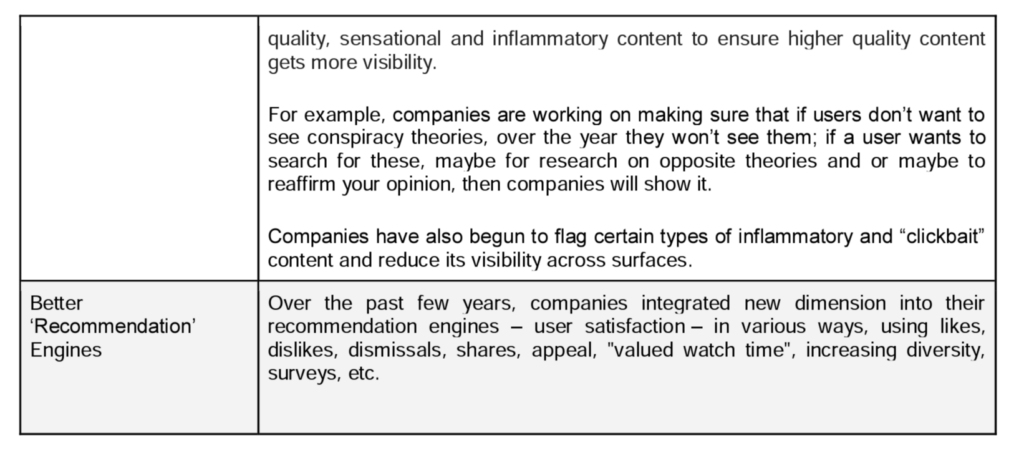

Combatting disinformation is far from simple. Determining whether content is authentic, and the intent behind it, is a complex task for both people and technology, especially when the content concerns current events or high-stakes topics such as health. As shown in Table 1.0, tech companies need to adopt a triad of strategies to address it effectively:

- Make Quality Count: Algorithms aim to treat websites and content creators fairly, prioritizing usefulness as measured through user testing rather than favoring specific ideological viewpoints.

- Counteract Malicious Actors: While algorithms can’t determine the truthfulness of content, they can identify intent to manipulate or deceive users. This calls for greater transparency in content operations.

- Give Users More Context: Various tools, like ‘Knowledge Panels,’ ‘Full Coverage,’ and ‘Breaking News’ shelves, equip users with contextual information to make informed decisions about the content they consume.
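Identifying intent to manipulate, without judging whether a claim is true, usually means looking at behavior rather than content. As a hypothetical illustration, the sketch below flags a message when several distinct accounts post near-identical text within a short time window, one simple signal of coordinated amplification. The `Post` type, the `flag_coordinated` function and its thresholds are assumptions for this example; real detection would combine many more signals with human review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Post:
    account: str
    text: str
    timestamp: float  # seconds since some reference time


def normalize(text: str) -> str:
    # Case-fold and collapse whitespace so trivial edits don't hide duplicates.
    return " ".join(text.lower().split())


def flag_coordinated(posts: list[Post], min_accounts: int = 3, window_s: float = 600.0) -> list[str]:
    """Return texts posted by at least min_accounts distinct accounts within window_s seconds.

    This says nothing about whether the text is true; it only surfaces a
    behavioral pattern (many accounts pushing identical copy at once).
    """
    by_text: dict[str, list[Post]] = defaultdict(list)
    for p in posts:
        by_text[normalize(p.text)].append(p)

    flagged = []
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        # Slide a time window over the sorted posts and count distinct accounts inside it.
        for end in range(len(group)):
            while group[end].timestamp - group[start].timestamp > window_s:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.append(text)
                break
    return flagged


posts = [
    Post("a1", "Breaking: the dam has FAILED, share now!", 0),
    Post("a2", "breaking: the dam has failed,  share now!", 30),
    Post("a3", "Breaking: the dam has failed, share now!", 90),
    Post("u9", "Anyone know if the dam is ok?", 100),
    Post("u9", "Anyone know if the dam is ok?", 5000),
]

print(flag_coordinated(posts))
```

Only the dam message is flagged: three accounts pushed the same copy within 90 seconds, while the repeated question comes from a single account.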

A Collective Responsibility

Ensuring a reliable and high-quality digital experience is a shared responsibility. Tech companies are constantly evolving their products to prioritize quality, counteract malicious actors, and provide users with valuable context. Beyond product improvements, collaborating with civil society and researchers and staying ahead of emerging risks are vital to combating misinformation and disinformation. Together, let’s strive for a trustworthy digital world.

The 2023 edition of the Network Readiness Index, dedicated to the theme of trust in technology and the network society, will launch on November 20th with a hybrid event at Saïd Business School, University of Oxford.

For more information about the Network Readiness Index, visit https://networkreadinessindex.org/

Ayse Kok Arslan is a member of the SSIT Standards Committee for The Institute of Electrical and Electronics Engineers, and a member of the Generative AI Working Group at the National Institute of Standards and Technology (NIST).