Sylvie Antal, Policy Research Associate

Immersive technologies, including augmented reality, virtual reality, and mixed reality, have the potential to transform our lives in the coming years. However, the features that make these technologies so powerful and transformational are also the ones that pose the greatest challenges for user safety, privacy, and well-being.

What is immersive technology?

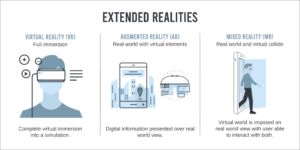

Immersive technology, also referred to as extended reality (XR), is an umbrella term describing technologies that merge the physical and virtual worlds. This includes augmented reality (AR), virtual reality (VR), and mixed reality (MR).

Immersive technologies have the potential to transform nearly every facet of our lives, including how we communicate, work, and learn. They can provide realistic training tools to simulate high-risk experiences, such as performing complex surgeries and preparing for combat. They also hold therapeutic potential for those with injury, disabilities, or trauma. Some companies are taking advantage of increased demand for remote services and offering XR shopping experiences. XR is also elevating entertainment, allowing users to experience the energy of a sports arena or concert from their home.

Immersive technologies are also being adopted by social media companies aiming to “upgrade” the user experience. Recently, Facebook announced its rebranding to Meta with plans for the upcoming “metaverse”, a new platform that extends beyond the capabilities of traditional social networks by creating a new digital world parallel to our current physical one.

As the cost of production decreases and the pace of development increases, we move closer to immersive technology’s widespread adoption and use. Virtual worlds are no longer a sci-fi fantasy portrayed only in futuristic television shows, but rather a real possibility, not to mention a huge market opportunity, evolving right before us.

So how exactly do these technologies work with our bodies to create an immersive experience? And what are the implications for our safety, privacy, and well-being?

How do immersive technologies work?

In short, XR technologies use behavioral and anatomical information measured by sensors and infrared cameras to capture a user’s reaction to stimuli over time, providing insight into their physical, mental, and emotional state to enhance their experience. Their unique capabilities rely on two distinct sets of features: components that measure bodily functions, and components that produce stimuli.

XR hardware collects data from several different biometric indicators. Sensors map the motion of the user’s head, hands, fingers, and feet. Eye tracking measures eye movement, revealing which objects are visually salient to the user. Facial expression analysis measures emotional response through facial muscle tracking. Galvanic skin response, or changes in sweat gland activity, provides further insight into the intensity of the user’s emotional state. Electroencephalography (EEG) measures the user’s brain wave activity, from which cognitive workload can be estimated, revealing how challenging they find a particular task. Electromyography (EMG) senses muscle tension, detecting micro-expressions when performed on the face. Electrocardiogram (ECG) data detects variability in the user’s heart rate when responding to a stimulus. External devices, like gloves and controllers, can also pair with the hardware to provide richer data on user movements.

The hardware then uses these signals to produce the appropriate stimuli based on the user’s movements and responses. These stimuli combine with features like a panoramic field of view, spatial mobility, and three-dimensional audio to create a fully immersive user experience.
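The measure-then-respond loop described above can be sketched in a few lines of Python. This is only an illustrative sketch, not how any particular headset works: the `SensorFrame` and `Stimulus` types, the `next_stimulus` function, the 0 to 1 skin conductance scale, and the 0.7 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """One hypothetical reading from the measuring components."""
    head_yaw_deg: float      # head rotation reported by motion sensors
    gaze_target: str         # object the eye tracker says the user is looking at
    skin_conductance: float  # galvanic skin response, assumed normalized to 0..1


@dataclass
class Stimulus:
    """What the hypothetical stimulus-producing components should render next."""
    camera_yaw_deg: float
    highlighted_object: str
    intensity: float


def next_stimulus(frame: SensorFrame, arousal_threshold: float = 0.7) -> Stimulus:
    # Keep the virtual camera aligned with the user's head, wrapped to 0..360.
    camera_yaw = frame.head_yaw_deg % 360.0
    # Tone the scene down when the skin response suggests high arousal.
    # The threshold is an arbitrary value chosen for illustration.
    intensity = 0.5 if frame.skin_conductance > arousal_threshold else 1.0
    return Stimulus(camera_yaw, frame.gaze_target, intensity)


frames = [
    SensorFrame(head_yaw_deg=15.0, gaze_target="door", skin_conductance=0.2),
    SensorFrame(head_yaw_deg=370.0, gaze_target="spider", skin_conductance=0.9),
]
stimuli = [next_stimulus(frame) for frame in frames]
for stimulus in stimuli:
    print(stimulus)
```

Even in this toy version, producing the next frame requires reading where the user is looking and how strongly they are reacting, which is why the sensing and the experience are hard to separate.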

A new kind of privacy concern

The same data inputs that make XR a powerful, transformational technology are also the ones with the greatest potential for harm. Because these technologies feel physiologically real, users respond to virtual simulations much as they would to real situations. The biometric data captured by the technology reflects involuntary responses, which means users cannot control what they reveal and cannot meaningfully consent to its collection. Further, when fused together, these data streams can be just as personally identifying as fingerprints or vocal patterns. They can also reveal a user’s interests, likes, and motivations.
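To make the point about fused data streams concrete, here is a small Python sketch. Everything in it is hypothetical: the three enrolled profiles, the choice of features (headset height, arm reach, average gaze fixation time), the per-feature scales, and the matching tolerance are invented for illustration and do not come from any real system or dataset.

```python
import math

# Hypothetical enrolled profiles: (headset height in m, arm reach in m,
# average gaze fixation in ms). All values are made up for illustration.
enrolled = {
    "user_a": (1.62, 0.68, 240.0),
    "user_b": (1.62, 0.74, 310.0),
    "user_c": (1.80, 0.74, 240.0),
}

# Assumed scale of each feature, so that differences are comparable.
scales = (0.1, 0.05, 50.0)


def candidates(sample, features, tolerance=1.0):
    """Return enrolled users whose scaled distance to the sample, computed
    over the chosen feature indexes only, is within the tolerance."""
    matches = []
    for name, profile in enrolled.items():
        distance = math.sqrt(
            sum(((sample[i] - profile[i]) / scales[i]) ** 2 for i in features)
        )
        if distance <= tolerance:
            matches.append(name)
    return matches


sample = (1.63, 0.73, 305.0)  # an "anonymous" session

height_only = candidates(sample, features=(0,))
fused = candidates(sample, features=(0, 1, 2))
print(height_only)
print(fused)
```

In this toy data, height alone leaves two possible users, while the three streams combined leave only one. No single measurement names the person, but the combination does.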

New innovations surface existing challenges

The sheer amount of information gathered by XR devices, and what can be inferred from that data, raises privacy concerns on several fronts. With large tech and social media companies investing in the immersive technology space, the closer ties between social media platforms and XR build on users’ existing worries about identifying information being sold to third parties. Given that XR data streams can reveal not only one’s present physical or emotional state, but also what external triggers are provoking those responses, this data is highly valuable to advertisers. With current legal protections covering only identifying information, there remains the potential for another incident similar to the Cambridge Analytica scandal.

Unfortunately, the risks go beyond violations of consumer privacy. Eye tracking, in particular, can reveal a wide range of health information, such as whether a user may be prone to conditions like dementia, schizophrenia, Parkinson’s disease, ADHD, and more. The availability of such information was not taken into account during the drafting of present health privacy laws. In fact, in the U.S., direct-to-consumer XR and neurotechnology companies are not bound by HIPAA regulations, even though the data they collect is as sensitive as protected medical information. Many have begun to imagine what might happen if such data were to become available to third parties, like insurers.

XR technologies create a sense of embodiment (feeling like a virtual body is your physical body). How our brains recall being in a virtual environment is similar to the way we create memories of offline experiences. Thus, virtual social experiences may take a toll on the user’s mental health, especially when considering the violence and harassment that have come to characterize many online social spaces. Experiencing a traumatic event such as a car accident in virtual reality may produce a post-traumatic stress response similar to one elicited by a car accident in real life. The growing number of reports of sexual assault on XR platforms presents yet another category of serious concern.

Mitigating adverse outcomes

While immersive technologies present many of the same concerns as their predecessors, their psychological and biometric aspects set them apart and make them more difficult to regulate. Despite the novelty and ambiguity of these challenges, what can companies, designers, developers, and regulators do to mitigate adverse outcomes from XR?

Given that the rapid growth of technological capability continues to outpace the development of regulatory frameworks, prevention through design remains a promising avenue. XR developers, and all those involved in the technology’s creation and dissemination, should be aware of its principles, values, and potential consequences for society. Designers should use this awareness to establish norms through design, setting expectations of how to behave and what to expect from others, as well as how to penalize users who violate those norms. Further, determining content moderation rules for XR communities is all the more pressing. Are existing content moderation frameworks and approaches adequate? Or will new frameworks be needed altogether?

Another approach to mitigating adverse outcomes is fostering improved digital literacy. Perhaps an immersive world, with new concerns like deepfakes, will even require reimagining the meaning of digital literacy altogether. Some scholars suggest the creation of a content-based rating system that would provide users with information to make decisions about what type of immersive experiences they wish to partake in. Others place more responsibility on platforms to ensure that users possess a genuine understanding of how their data is being collected, stored, and used.

Moving forward towards an immersive world

Given the spike in investment and development, it’s important to consider how these technologies will continue to evolve in the coming years. Some companies are already developing hands-free versions of their products that rely on motion tracking using predictive AI, or that combine XR with neurotechnology to directly capture neuron activity.

Considering the sensitivity of the information gathered by immersive technologies, debate continues over which methods of data collection and use should be considered unethical. We might be able to recognize these boundaries after they have already been crossed, but it’s hard to predict what that looks like or when it might happen. Ensuring that human rights have a formative role in the growth of immersive tech can help promote accountability not only for companies to innovate responsibly, but also for governments to protect their constituents. Now is the time to begin building awareness, accountability, and expectations for proactive approaches, in the best interest of users.

Sylvie Antal is a Policy Research Associate at Portulans Institute with prior experience in digital privacy issues relating to minors and vulnerable populations, as well as in consumer education and digital literacy. She holds a Bachelor’s degree in Information Science from the University of Michigan’s School of Information and a Master’s degree in Human-Computer Interaction.